Software Architecture

This research focuses on the problem of autonomous flight of unmanned aerial vehicles, in particular Micro Aerial Vehicles (MAVs), in indoor and outdoor scenarios where the GPS signal is unreliable or inaccessible. Autonomous flight is a desirable capability in applications where tedious and repetitive tasks must be performed, since it frees the operator to focus on other tasks. To this end, this research aims to investigate novel ways of fusing the different sensors on board the vehicle (e.g., cameras, accelerometers, gyroscopes and compass) to estimate the vehicle's position in real time. This estimate can then be exploited to develop navigation modules for autonomous flight in complex GPS-denied scenarios.

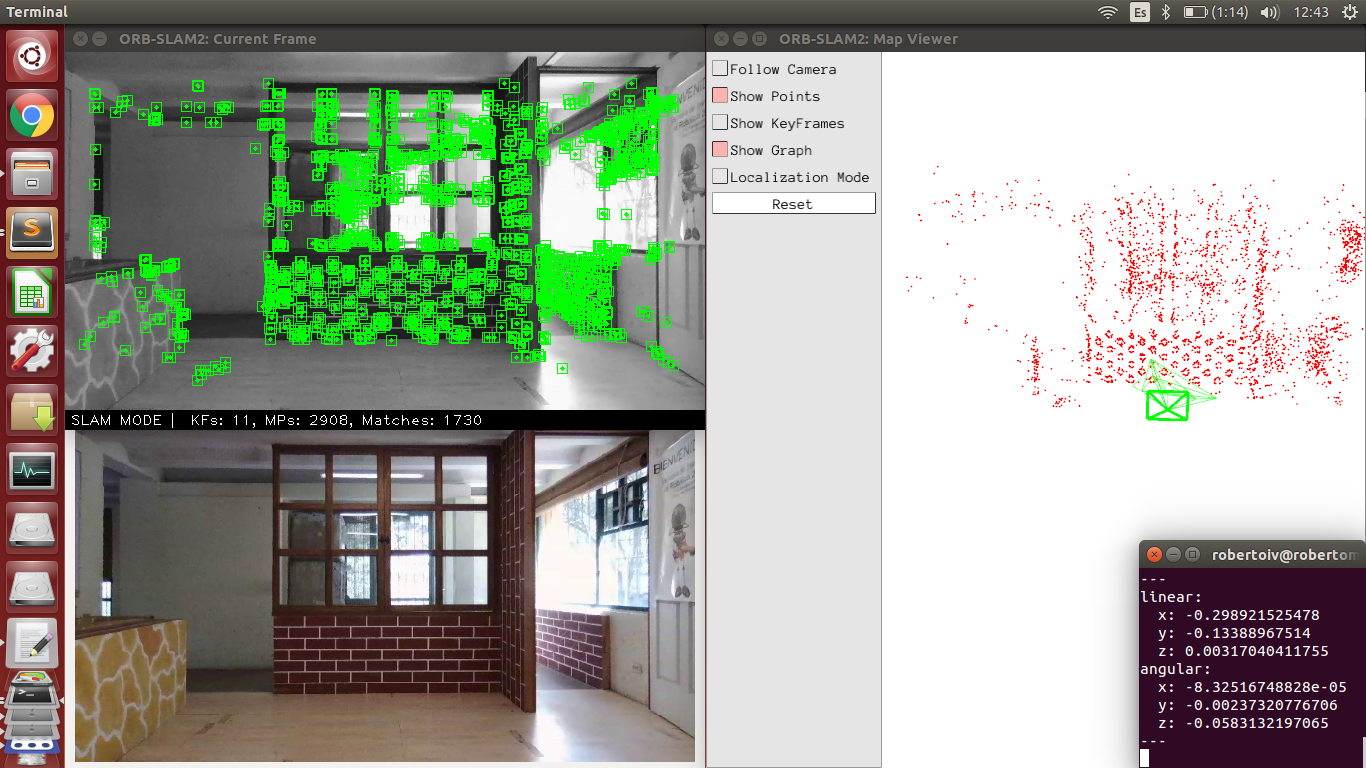

We also aim to explore different visual sensing mechanisms, taking advantage of the availability of sophisticated cameras such as time-of-flight cameras, event cameras, stereo cameras and multi-camera rigs. The ultimate goal is to exploit the implicit richness of visual data to extract useful information about the observed scene, such as semantic meaning, context understanding and situation awareness, for autonomous navigation. For example, the vehicle should realise when it is flying too close to buildings or people, or is operating in a risky scenario. This research involves key topics such as Visual Simultaneous Localisation and Mapping (Visual SLAM), sensor fusion, control applications, real-time computer vision, high-performance computing and motion planning.